Apple

- Double tap the Safari icon in the dock or tap and hold then select Show all Windows.

- If you’re using a Magic Keyboard (or another keyboard with the globe key) use the globe and down arrow shortcut.

- Look in the sidebar of recent apps on the left, tap or click the Safari icon (if it’s visible in one of the 4 spaces).

- Activate multitasking and tap the Safari icon anywhere you see it amongst your open apps.

- Successful responses based on current feature set: 9/10

- Innovation and addition of new features: 3/10

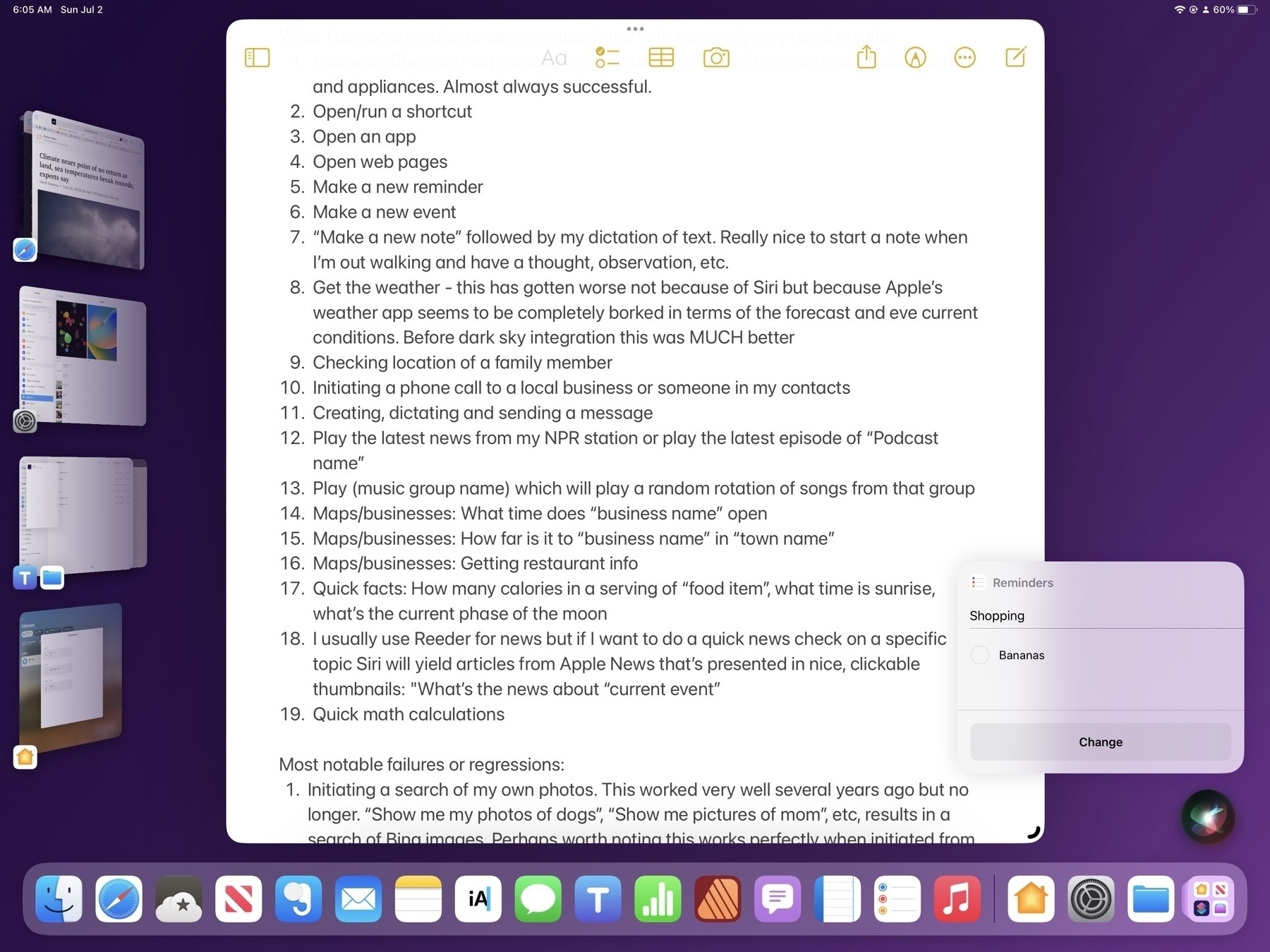

- HomeKit: Checking temperature of various locations with sensors, controlling lights and appliances. Almost always successful.

- Open/run a shortcut

- Open an app

- Open web pages

- Make a new reminder

- Make a new event

- “Make a new note” followed by my dictation of text. Really nice to start a note when I’m out walking and have a thought, observation, etc.

- Get the weather - this has gotten worse not because of Siri but because Apple’s weather app seems to be completely borked in terms of the forecast and even current conditions. Before Dark Sky integration this was MUCH better

- Checking location of a family member

- Initiating a phone call to a local business or someone in my contacts

- Creating, dictating and sending a message

- Play the latest news from my NPR station or play the latest episode of “Podcast name”

- Play (music group name) which will play a random rotation of songs from that group

- Maps/businesses: What time does “business name” open

- Maps/businesses: How far is it to “business name” in “town name”

- Maps/businesses: Getting restaurant info

- Quick facts: How many calories in a serving of “food item”, what time is sunrise, what’s the current phase of the moon

- I usually use Reeder for news but if I want to do a quick news check on a specific topic Siri will yield articles from Apple News that are presented in nice, clickable thumbnails: What’s the news about “current event”

- Quick math calculations

- Initiating a search of my own photos. This worked very well several years ago but no longer does. “Show me my photos of dogs”, “Show me pictures of mom”, etc, results in a search of Bing images. Perhaps worth noting this works perfectly when initiated from a Spotlight search, but fails when initiated from Siri.

- Multiple follow-ups, conversational interactions (not really a feature in the first place). This is a specific feature noted for the upcoming release of iOS 17.

- Asking Siri for recent emails or messages for a contact yields inconsistent results and a cumbersome, difficult interface. If I ask Siri to show me recent emails from a contact it usually only shows one email but sometimes will show more and will read the first email subject line asking if I want more read. If I say no the list disappears. There's no way to interact with the found emails.

- The customizable Lock Screen with widgets looks really nice and I imagine will be a helpful addition.

- Interactive widgets (and more placement options of those widgets on the Homescreen).

- PDFs in Notes get some really excellent updates like machine learning that recognizes/creates form fields. For people that use pdf forms or who rely on pdfs for annotating or collaboration, these updates will be really useful. I can imagine this will be really great for students.

- Notes gets cross-note linking which is going to be helpful for some.

- Reminders is getting a new column view and categories for grocery lists.

- Not too surprising, Stage Manager is getting more window resizing and placement options which will improve the experience.

- Files: More customizable tool bar, more complete indexing of file contents for better search results, more column options in list view

- Improved or added support for smart lists, saved searches in apps like Files, Mail, Notes, Reminders, Contacts

- Improved Safari bringing it ever closer to the full desktop experience

- Improvements for Stage Manager and multitasking

- Improvements to virtual memory and background tasks for apps like Final Cut Pro

- While Pages, Numbers and Keynote are all excellent apps, there's more to do to bring them fully in-line with the Mac apps

- As the new app in the Apple ecosystem, Freeform could use some big improvements. This app should really shine on the iPad.

- FCP: To start I'd expect to have the above mentioned missing features addressed: round tripping to and from the Mac, editing from external storage

- Xcode. I'd guess that in 2023 Swift Playgrounds will again be improved but that we'll also see Xcode for iPad. First to what will be described as Xcode lite and then to something closer to the full version.

- Improved Lock Screen that will bring last year's iPhone Lock Screen improvements to the iPad

- Improved widgets, perhaps with new options for interaction

- iCloud Pro?

- More sharing, collaboration and development of the iCloud and app ecosystem into a more complete social network.

- Whatever is happening with the headset I'd expect Apple to market it as the new TV. Talking to family members that all have iPhones, I've recently become more aware of their media consumption habits. It's more individualistic than I realized. It occurs to me that the long-term vision of the headset might simply be the new TV: Movies, shows, sports and as a general purpose computer. I'd speculate that Apple hopes that in 5 years homes with iPhone users will have 1 or more headsets that have replaced flatscreen tvs.

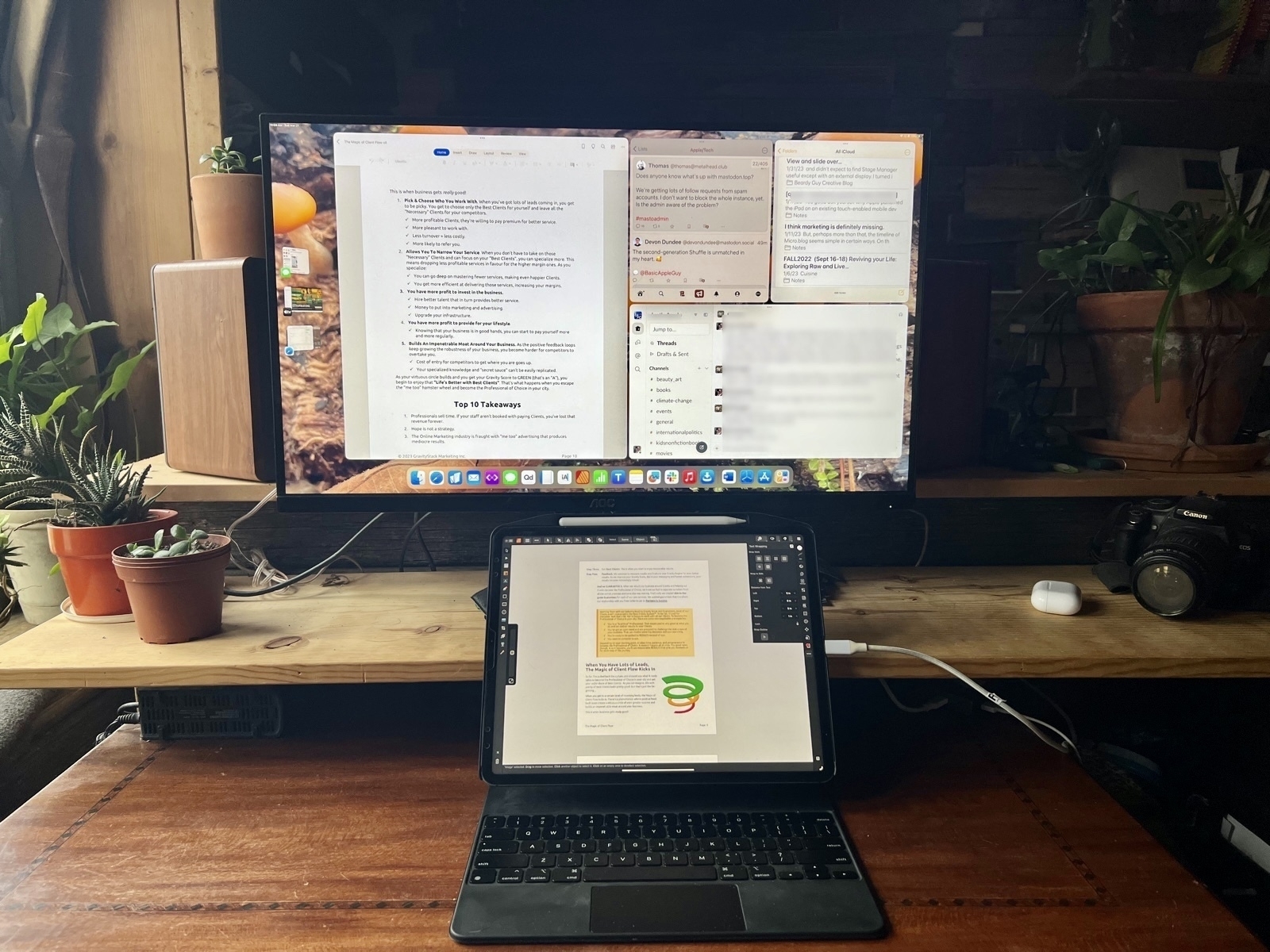

- That FCP does not allow editing off of an external SSD is true but it's a limitation of FCP. LumaFusion and DaVinci Resolve both have this feature

- The lack of some keyboard shortcuts in FCP has nothing to do with iPadOS

- The interaction of FCP with Stage Manager is critiqued but again, this is how Apple has implemented FCP. I've not used DaVinci Resolve but LumaFusion has no problem with Stage Manager on the iPad screen or full screen on an external screen. It's excellent.

- Round-tripping a FCP project from iPad to Mac and back. Again, this is likely a limitation of FCP not iPadOS.

Using the iPad with Stage Manager? Have a lot of Safari windows stacked up? Remember the different ways to activate App Exposé to quickly pick the window you want:

Of course this works with any app but I suspect that for many people Safari is one that is most likely to have the most windows open.

I’m finding the new Siri improvements way more useful than I expected. Though a small thing the no “Hey” required really does make for less friction in usage. And multiple follow-up requests without reactivation is also better than expected. Small refinements can make a big difference.

The easy way to move Safari tabs on iPad into tab groups. Rather than individually long pressing tabs to move just drag and drop directly from the tab bar or use the tab overview. Multitouch tap and hold to select multiple tabs then drag into group in sidebar. Why haven’t I tried this before?

iPadOS 17: Improved file content indexing

For iPad users, it appears that with the iPadOS 17 beta file contents are more thoroughly indexed and revealed in Files app search results than previously. Some of these were indexed before but some are new. I’m seeing results from text/html/markdown files in app folders for Notebooks, iA Writer and Textastic. Also content search results for pdfs, Numbers and Pages documents.

I love the visual design of Apple’s Weather app on iPhone and iPad. Love it. But it’s always wrong. The only time it’s not wrong is current conditions which is only sometimes wrong.

I’m using Foreca instead. Has excellent widgets including live radar and a one time purchase option. You?

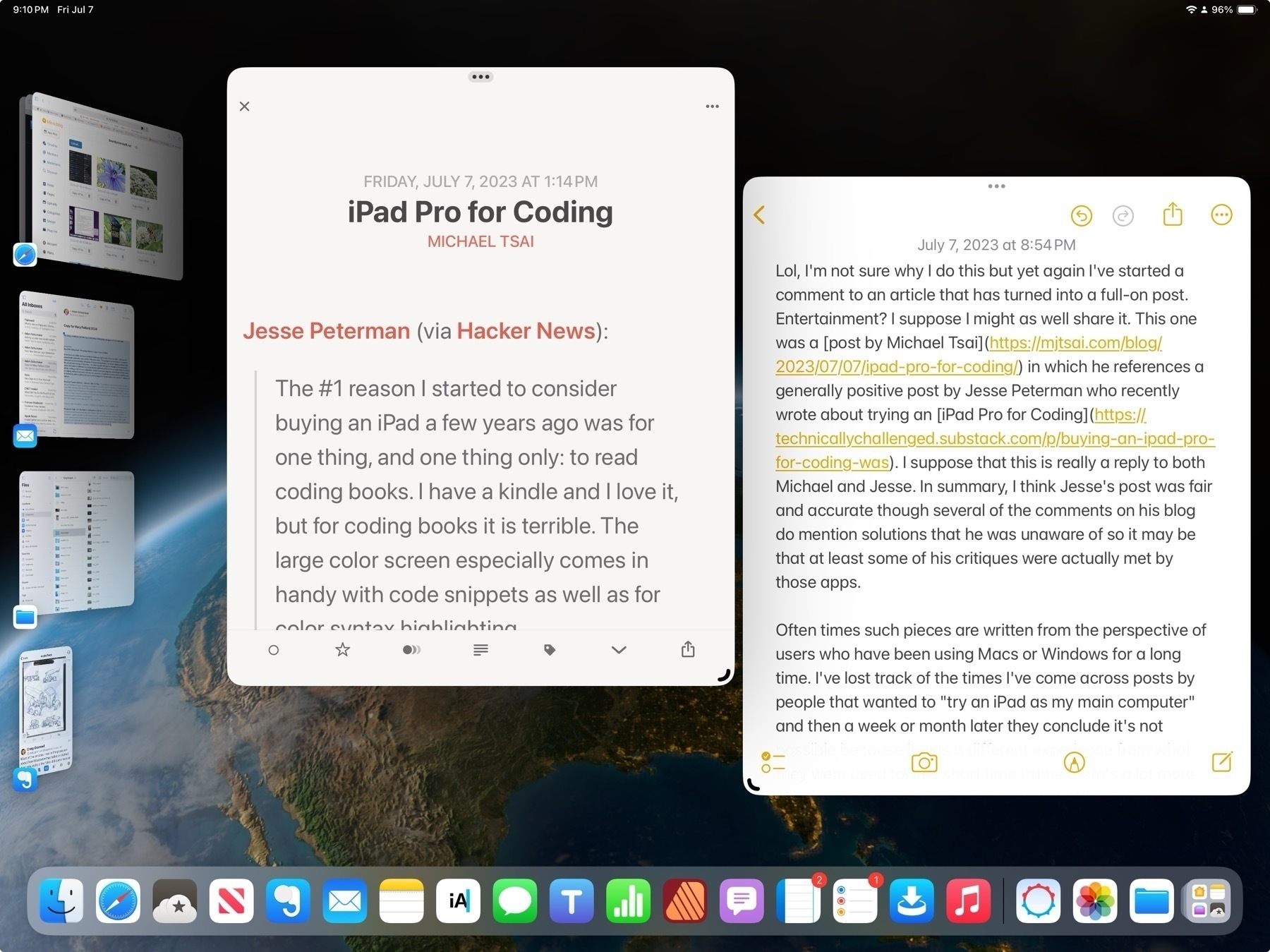

Lol, I’m not sure why I do this but yet again I’ve started a comment to an article that has turned into a full-on post. Entertainment? I suppose I might as well share it. This one was a post by Michael Tsai in which he references a generally positive post by Jesse Peterman who recently wrote about trying an iPad Pro for Coding. I suppose that this is really a reply to both Michael and Jesse. In summary, I think Jesse’s post was fair and accurate though several of the comments on his blog do mention solutions that he was unaware of so it may be that at least some of his critiques were actually met by those apps.

Oftentimes such pieces are written from the perspective of users who have been using Macs or Windows for a long time. I’ve lost track of the times I’ve come across posts by people that wanted to “try an iPad as my main computer” and then a week or month later they conclude it’s not possible because it was a different experience from what they were used to. In a short time frame there’s a lot more friction as a new-to-iPad user settles in. Windowing and multitasking seem to be the initial primary obstacles but then also, sometimes, needed apps are not available or, if an app is available, it’s not an exact match to the version they’re used to.

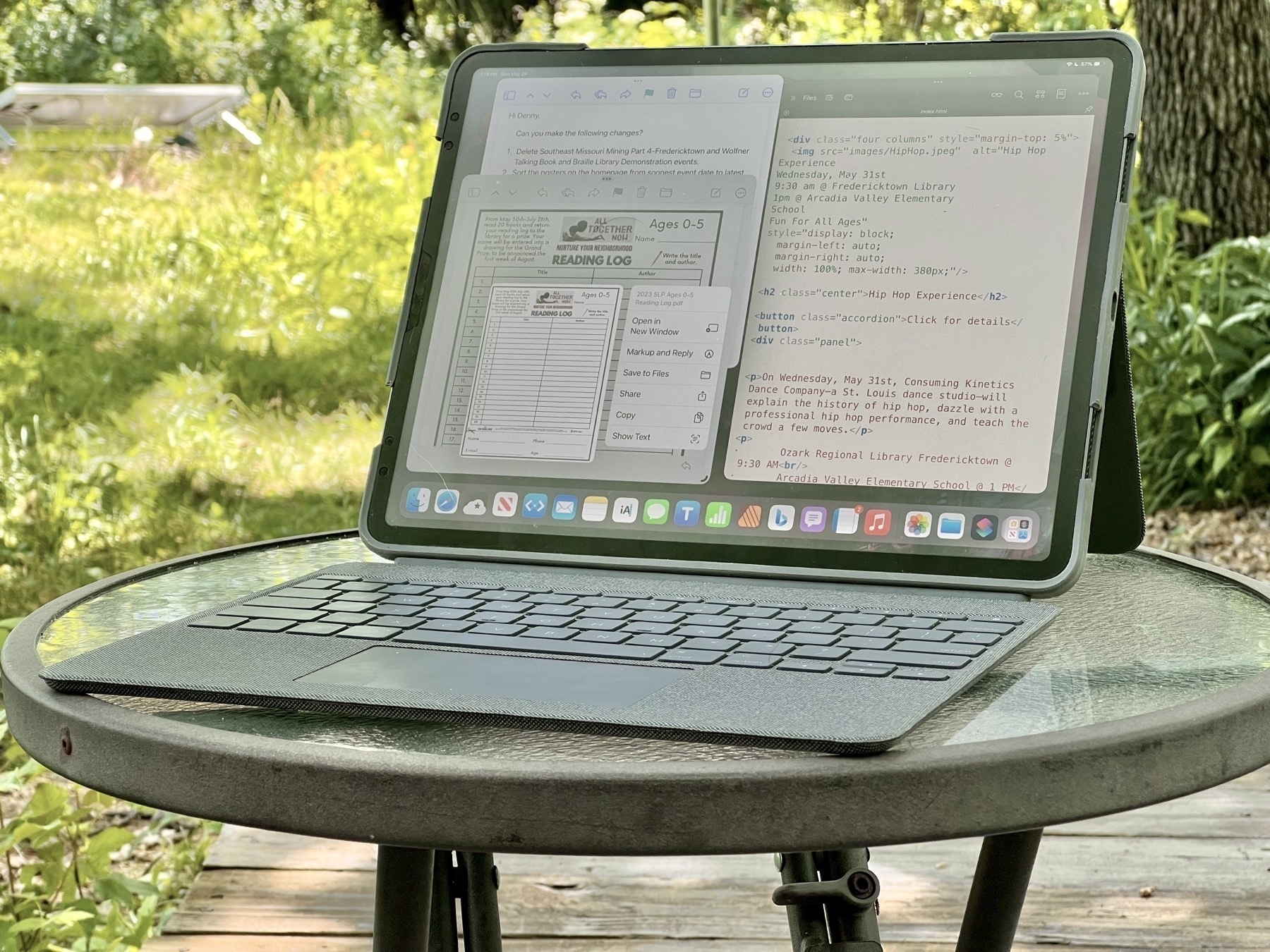

My original iPad docked in Apple’s Keyboard Stand

I came to the iPad as a long time Mac user (A Color Classic and System 7.6). Like many I bought the first iPad and in those days was happy to use it as a tablet alongside my Mac. But I also bought it with the Keyboard Stand accessory that Apple sold and within the first few months had found an app, Gusto, that was built for managing/coding websites. It had a very similar feel to Panic’s Coda with a sites window with thumbnails, an excellent text/html editor and a built in ftp client. Well, that gave me my first taste of “using the iPad for real work”. Given that much of my work then (and now) involved setting up and maintaining small, static websites in the old school html/css/ftp way, well, I was actually quite happy with that set-up.

Over the next few years I happily bounced back and forth between the Mac and iPad. In those early days I relied on the Mac for graphics focused work and the iPad for reading, writing, blogging and code. Around 2016 Serif started releasing iPad versions of their apps and that allowed me to switch over another chunk of my work. None of this was planned, I enjoyed using my Mac and iPad together. But when given the opportunity I usually chose the iPad if it was the right tool for the job. By that time I’d learned all of the gestures and with each new year I learned any new gestures, new features, etc.

Using the iPad beyond casual consumption requires mastery of its interface and an interest in taking advantage of what makes it different: the touch screen. This is really key and seems obvious but many seem to overlook it when discussing features, price and limitations. Of course a 13" iPad Pro is going to be quite expensive, it's got a large glass touch screen. In some ways it is more limited and these days its battery life is less than the M-based Macs. And yes, it’s heavier than some when you add in the keyboard.

I still keep a Mac Mini around as a file server and as a back-up but sold my MBP back in 2017. I feel a bit like a ninja or a wizard with the iPad. Being able to use it without a keyboard is something I really value. I long ago mastered the many multi-touch gestures that are available and my fingers are always dancing across the glass. I enjoy that experience. But the keyboard is always nearby and about 50% of the time is attached and I’m happily using the keyboard, trackpad and touchscreen together. And still other times I’ve also got an external monitor attached.

We’re 8 years in since the release of the 1st iPad Pro and though the evolution of iPadOS has been too slow for some I’ve found the last three years of features have added up to a refined user experience that brings a more flexible, powerful range of possibilities for anyone that wants to take advantage of a touch screen, modular form factor. Really, at the end of the day, I like to celebrate the fact that we have so many Apple computers to choose from because it also means so many more people get to have the comfortable computing experience that lets them do more. It’s a win for everyone.

If using the iPad is something you’re interested in I’ve written 100+ iPad focused posts.

A check-in with Siri and Apple's Machine Learning

First, a recent bit of related news from Humane, a company founded by ex-Apple employees that has finally announced its first product. I bring this up at the beginning of a post about Siri because the purported aim of the product, the Ai Pin, is ambient computing powered by AI. It is a screen-less device informed by sensors/cameras that the user interacts with primarily via voice or a touch-based projection. Humane is positioning the device as a solution for a world that spends too much time looking at screens. The Ai Pin is intended to free us from the screen. It's an interesting idea but, no.

My primary computer is an iPad and then an iPhone. These are supplemented with AirPods Pro, the Apple Watch and HomePods. Of course all of these devices have access to Apple's assistant Siri. It's common among the tech and Apple press to ridicule Siri as stagnant technology left to wither on the vine. A voice assistant that's more likely to frustrate users than enable them in useful ways. The general joke/meme is that Siri's only good for a couple of things and that it often gets even those few things wrong.

But that's not been my experience. In general my experience using Siri has been positive and I've long found it useful in my daily life. I do think there's some truth to the notion that Apple's been fairly conservative in its pushing forward of Siri. As is generally the case Apple goes slowly with careful consideration. I'm not suggesting Siri is perfect or finished. Of course not and yes, there's more to be done.

All that said, I consider it a big win that I have an always available, easy to access voice assistant that complements my visual and touch-based computing. I use Siri several times a day with very good results and I’d say that my satisfaction with Siri the voice assistant is pretty high just in terms of the successful responses I have. My primary methods of interaction are via the iPad or iPhone, sometimes with AirPods Pro, using a mix of Hey Siri and keyboard activation.

Here I'm offering just the most basic, 2 point evaluation:

What I use on a regular or semi-regular basis with generally very good results:

Most notable failures or regressions that I've found in my use:

In the tech sphere, where the news has been dominated by "AI" for the past 10 months, most recently ChatAI, large language model integration into web search, Microsoft's Copilot and Google's Bard being the most prominent examples, pundits were wondering, what would Apple do to respond? When would they put more effort into Siri and would it include AI of some sort? Or would the voice assistant be left to fall further behind? The not too surprising answer seems to be that Apple will continue to improve Siri gradually on its own terms without being pressured by the efforts and products of others or the calls from tech pundits.

We view AI as huge, and we will continue weaving it into our products on a very thoughtful basis. - Tim Cook.

Siri the voice assistant is just one part of the larger machine learning that powers features like dictation, text recognition, object ID and subject isolation in image files, auto correct, Spotlight and much more. While Siri is a focus point of interaction, the underlying foundation of machine learning is not meant to be a focus, it's not the tool but rather the background context that informs and assists the user as features found in the OS and apps. Machine learning isn't as flashy as ChatGPT and Bard but unlike those services it absolutely and reliably improves my user experience in meaningful ways every day.

For those that haven't used Siri recently Apple provides a few pages with examples of the current feature list: Apple's Siri for iPad help page and Apple's Main Siri page

How to check your iPad’s battery health | Tom’s Guide

Knowing how to check your iPad’s battery health might sound easy, but it’s actually strangely hard information to find, despite how important that data is. Batteries degrade over time, so it’s handy to know how your long-serving tablet’s faring, or how healthy an iPad is if you’re trying to buy or sell it second-hand.

Be sure to read to the end to get the Shortcut which makes it much easier. Apple really should add the Battery health status to the settings app.

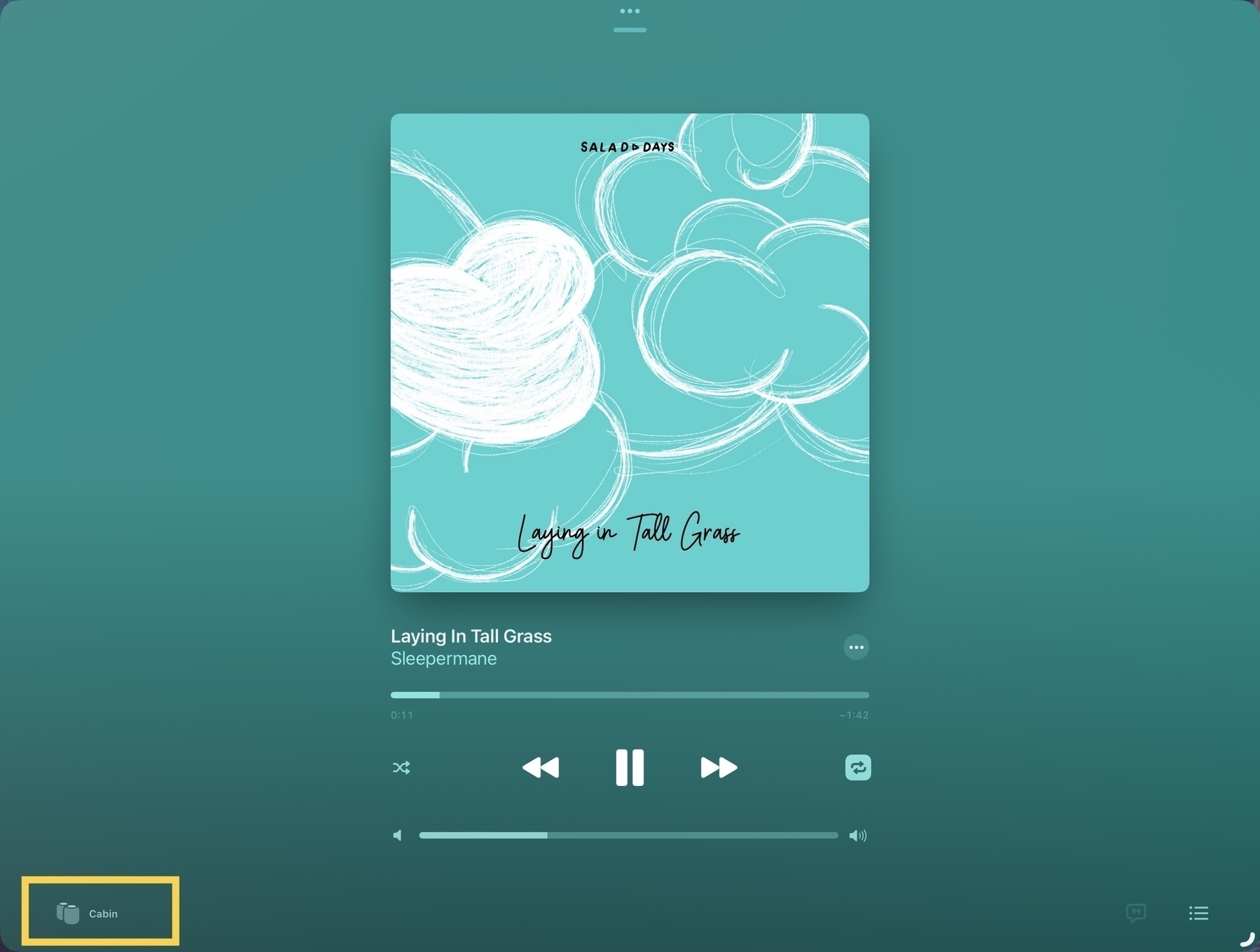

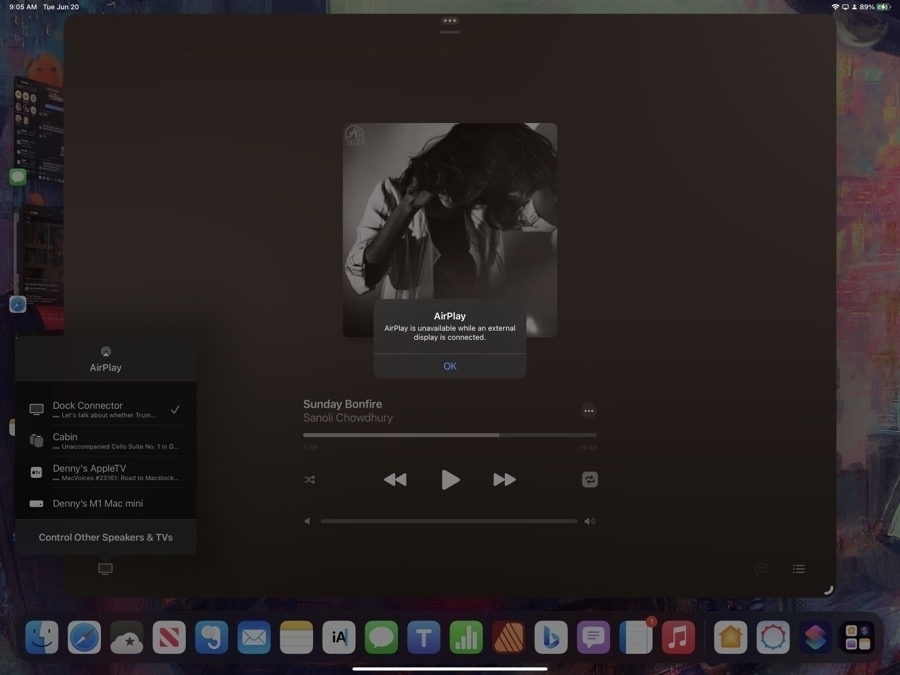

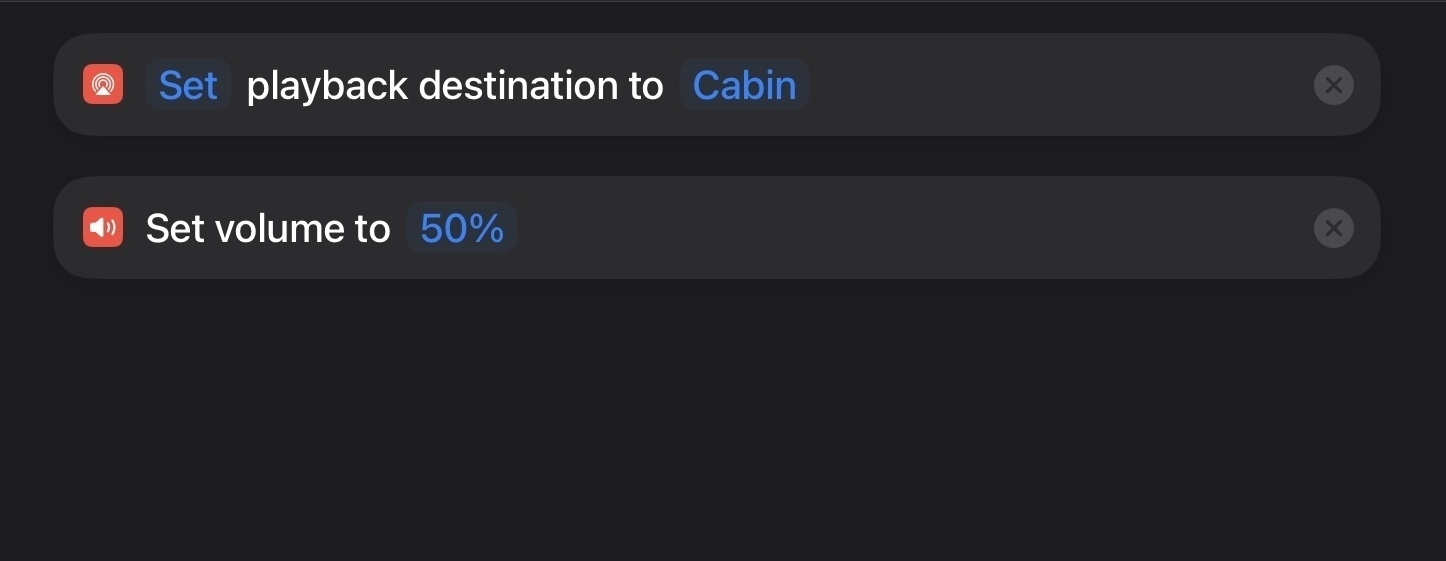

A Shortcut fix for iPad AirPlay to HomePods when using an external monitor

If you're using an M1 or M2 iPad with an external monitor you'll have noticed that the only audio output available is that monitor. If there are no speakers or if the built in monitor speakers are lousy you have a couple of choices for audio. Of course, AirPods are an option. So is Bluetooth to an audio speaker. If the monitor has an audio out you could plug in speakers. But HomePods are another option.

It might seem at first that this won't work. When using Music or the TV app, if you select HomePods from the AirPlay widget you'll get an error: "AirPlay is unavailable while an external display is connected."

The solution is a simple shortcut. Run this and you can play audio to your HomePods when connected to an external monitor. If you pause playback for more than a few minutes you'll have to re-run it because audio will default back to the connected monitor.

Other benefits of using this Shortcut: It seems to connect a bit faster than using the built in AirPlay widget found in apps. When I use that widget in the Music or Podcast app it actually transfers "ownership" of the audio stream to the HomePods. So, really, it's not AirPlaying the stream from the iPad/iPhone to the HomePods but rather the HomePods become the new source device:

When using the Shortcut the iPad or iPhone remains the source device and pushes the audio to the HomePods:

Another benefit when using the Shortcut is bedtime playing of a podcast. By using the shortcut method I can set the sleep timer in the Podcast app from the device. This isn't an option when using the AirPlay widget.

A few thoughts on WWDC23, the Vision Pro and iPadOS 17

It's been a couple weeks since Apple's WWDC keynote and I've been enjoying everyone's excitement. I'm not big on offering a quick hot take as I'd rather take my time in pondering the news.

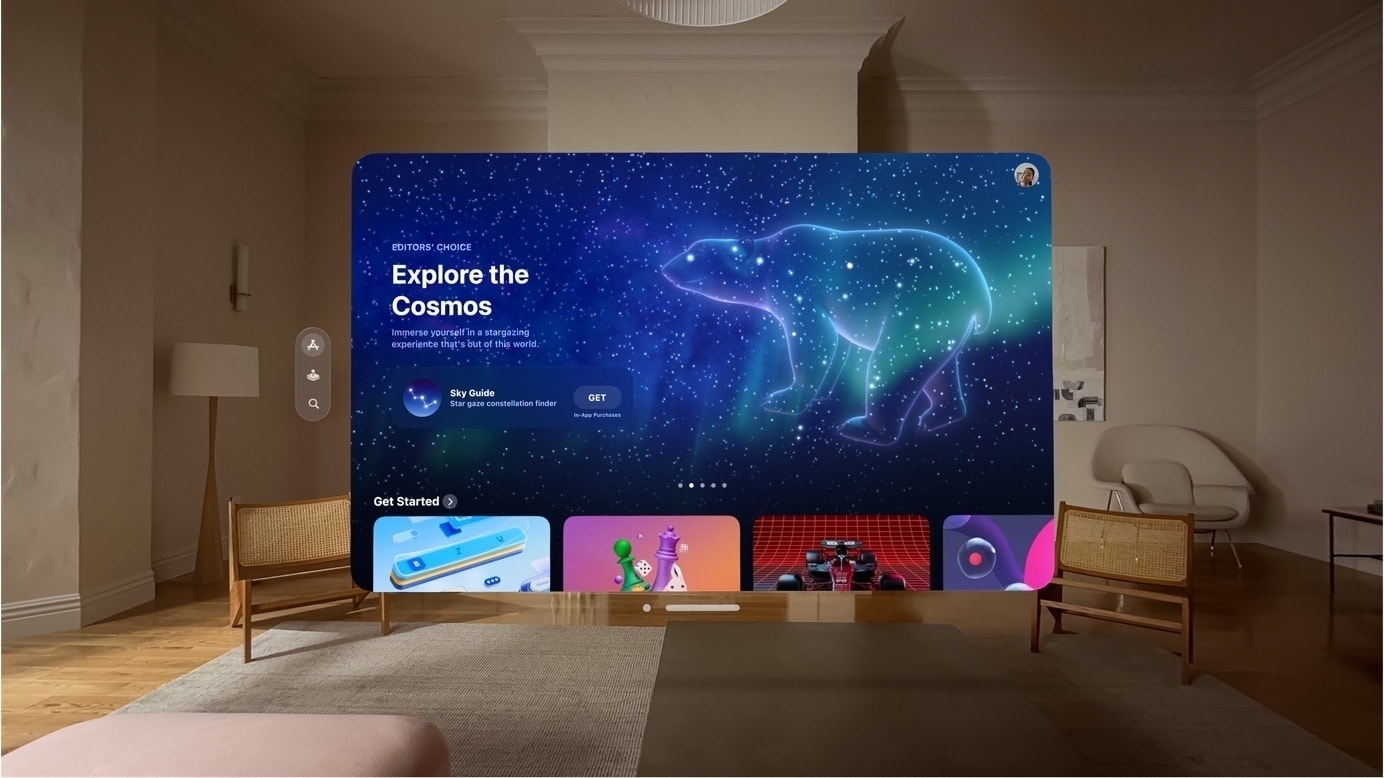

Apple Vision Pro

My initial reaction to the Vision Pro was pretty much in-line with what I'd expected based on the rumors. I think Apple's spatial computer will be a success. Will it redefine computing? That might be an overstatement but it seems likely that if all goes to plan it will certainly have a dramatic influence. Initially it will be an added option, an expensive and premium experience for those that can afford it. But given the cost of a MacBook Pro and external display can get into the territory of $3,000+ the Vision Pro, as a computer with nearly unlimited, room-filling screen size seems to be well within the range of Apple's current offerings.

In the run-up to the announcement, much has been speculated about the use-case for this device. Thus far headsets have been focused on virtual reality experiences such as gaming, and, I think, some are using it for certain industrial/training type applications. At least, that's my impression from a distance. I've not paid much attention to it. Meta wanted to expand that out into a virtual world of weird floating torsos for meetings and other, perhaps social events. Again, I've not paid much attention to it beyond initial skimming of the creepy avatars. Meta's attempt hasn't gone far and seems to be stalled. And so it's easy to think that this is a very small, niche market not likely to be a space where broad consumer success would happen, even for Apple.

I think it's clear to most by now that what Apple showed us two weeks ago is intended to be something much bigger. And really, it's not surprising given the time and resources they've put into the product. Of course they're going to offer something unlike what's come before, that's what they do. This is not a VR headset but rather a powerful computer that you wear in front of your eyes. It's more akin to an iPad Pro than a Mac in that it will run iPad style apps from the start and it has a windowing system that seems more reminiscent of an iPad Pro with Stage Manager enabled. While the windows have a new visual style unique to the new OS they generally seem similar to the style of windows in iPadOS in that they are rounded with more space between elements when compared to the more dense, less rounded Mac app windows.

I also suspect that the Vision Pro will frustrate some Mac users in the same way the iPad has. After the initial flash of excitement Mac power users will run up against an OS and applications that, while visually very well designed are still, at the end of the day, more like an iPad than a Mac. And while iPadOS and apps have all been growing in complexity and capability they are still not quite a Mac. But, more importantly, like the iPad, the Vision platform is based on an interaction model that is not a keyboard and a mouse and some will struggle with that just as they have with the iPad and its unique gesture based interactions.

That said, one key difference is in the size of the windows which will give Vision computers some breathing room. The iPad form factor has always been smaller, maxing out at 13". Even as the OS has become more refined and powerful, it's still a small screen and I think that's been a problem for Mac users that are used to screens 14" and larger. But Vision computers won't be limited in that way. Users will have more room to work and that will relieve some of the sense of confinement they feel when working with the smaller screened iPad. I suspect the experience of using a Vision computer, compared to a Mac, will be simultaneously amazing and frustrating for some.

Another key difference, Vision computers will also offer a gaming and entertainment experience that goes far beyond what a traditional computer or iPad can offer. That, combined with a huge, iPad sized App Library plus new apps designed for the platform, would seem to provide the kind of foundation that might lead to a slow and steady adoption.

We'll still be using smart phones, tablets, laptops and desktops for a good long while. But in 3-5 years we'll begin to see the roll-out of more models of Vision computers in higher volumes. With early bugs squashed, a larger App Library, an improved, higher volume production line, the prices will have started to come down and the general public will have gotten more used to the idea of such a device and what it offers. My guess is that the least expensive version of the 2028 model will be the hardware equivalent to this first offering but at a much lower price. Thinking about it in the context of a long game, in the 4 to 6 year time frame, it seems reasonable to suggest that Apple's headset line-up will have come down to entry level models in the range of $1,500 to $2,000. Still above a budget Mac or iPad, but more affordable.

We'll see. At the moment there seems to be a lot of potential and excitement for this new category of computer. There's plenty of time yet for more hot takes, flushed excitement to be followed by flushed frustration.

Next, iPadOS 17. Beyond the Apple Vision Pro, my primary interest is what's coming to iPadOS. Probably most notable:

I've been really satisfied with iPadOS 16 and the improvements coming with the above features and the many other enhancements in iPadOS 17 will only make it better. The improvements to Stage Manager will likely quiet some of the most vocal complaints of that feature heard over the last year. In other words, the iPad is still not a Mac but some will find it more usable as it more closely approximates the macOS experience they want.

There was no mention of Xcode for iPadOS so that will be a complaint for another year.

With the Stage Manager improvements and the release of FCP and Logic Pro for iPad just before WWDC, it seems likely that we'll see a 15" iPad Pro or iPad Studio sometime in the next year. And I would think that either this year or next year's iPhones will also have cameras/features added for both Vision's 3D video as well as for capturing video for FCP for the iPad. Is there enough bandwidth for that sort of real time capture?

Those of us already happy with the iPad will be even happier.

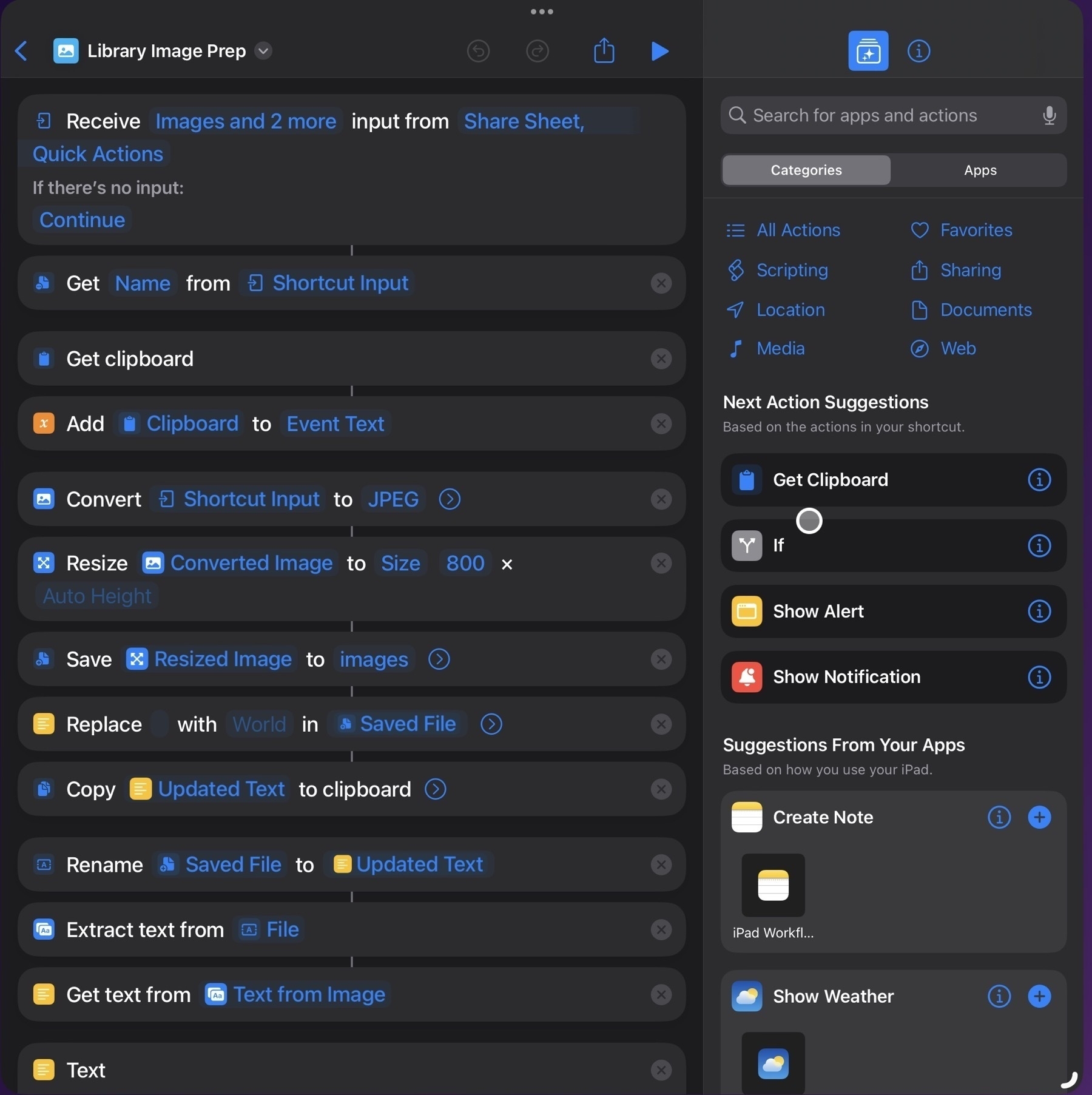

iPad Workflow- Using Shortcuts to process images for the web

Convert image or pdf, resize, save, rename and extract alt text with two taps

One of my regular tasks is updating the front page of our regional library website, either adding upcoming events or removing finished events. Our front page is a grid of event flyers with an expandable accordion under the flyer that contains a text description of the event, usually very similar to the flyer itself. A staff member emails the flyer with the accompanying text.

Previously my process was to have Mail and Textastic open side-by-side for easy copy/pasting. The attached flyer images are a mix of pdf, jpg or png. In the past I would have saved to Photos then selected them all and used a Shortcut to export to the website’s image folder in Files, converting them all to jpegs at a preset size and quality. Fairly quick and easy. I still had to rename the files and then I would proceed to copy the event text from the email and paste it into the html, manually copying or typing the name of image files into the html.

But in recent months I’ve added new steps to the shortcut. Now the shortcut will first process text in the clipboard, turning it into a variable, then it extracts the text of the flyer and adds it to the clipboard. Then the shortcut puts all the text together into a field which it copies to the clipboard. I’m not sure why I didn’t add these steps in earlier. This is the third revision of this shortcut, each time new steps have been added to streamline the process.

The only downside is that I can only do one image at a time which isn’t too bad as the typical email only has 2 to 4 such files. Overall I’m still saving far more time with this approach.

So, here’s how it flows from my perspective as a user: I select the text of an event in the email sent to me and copy it. Then I select the image or pdf in the message and select share then select the shortcut. The shortcut runs and brings Textastic to the forefront. I just scroll in my html document to where the new event text/photo is to be inserted then paste. Done! I still have to spend a minute there adding a couple of paragraph tags and doing a quick clean-up of any errors in the text extraction but it works pretty well and the whole process only takes a few seconds after I paste.

So, while the original process was a 6-8 minute back and forth between Mail and Textastic with bits of text editing, copy/pasting in Textastic, the new process is a text selection and copy, 2 trackpad taps in Mail then a paste. About 20 seconds.
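For anyone who wants to reason about the assembly step outside of Shortcuts, here is a rough Python sketch of what the shortcut does with the two pieces of text: the event description copied from the email and the text extracted from the flyer. This is not the shortcut itself. The function names, the `images/` folder, the slug-style file name and the `<details>` accordion markup are all assumptions made up for illustration, and unlike my shortcut this sketch also adds the paragraph tags.

```python
import html
import re


def slugify(title: str) -> str:
    """Turn an event title into a lowercase, hyphenated file name stem."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower())
    return slug.strip("-")


def build_event_html(title: str, event_text: str, flyer_text: str) -> str:
    """Combine copied email text and extracted flyer text into one HTML fragment.

    The markup below (an image followed by a <details> accordion) is a
    hypothetical stand-in, not the real markup of the library site.
    """
    image_name = f"{slugify(title)}.jpg"
    # Collapse line breaks and runs of spaces so the flyer text works as alt text.
    alt_text = " ".join(flyer_text.split())
    # Treat blank lines in the email text as paragraph breaks.
    paragraphs = [p.strip() for p in event_text.split("\n\n") if p.strip()]
    body = "\n".join(f"    <p>{html.escape(p)}</p>" for p in paragraphs)
    return (
        '<div class="event">\n'
        f'  <img src="images/{image_name}" alt="{html.escape(alt_text, quote=True)}">\n'
        "  <details>\n"
        f"    <summary>{html.escape(title)}</summary>\n"
        f"{body}\n"
        "  </details>\n"
        "</div>"
    )
```

Calling `build_event_html("Story Time", email_text, flyer_text)` returns a single string ready to paste into the page, which mirrors the "copy everything to the clipboard, then paste once" idea behind the shortcut.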

Shortcuts is a fantastic timesaver for repetitive tasks and it’s an app I keep finding new ways to use.

An iPad Pro Revival

I don’t often speculate about upcoming Apple announcements but I’m going to make an exception with this post. And it’s nothing complicated, just putting a few pieces together. Most of it is probably pretty obvious to folks who have been paying attention to Apple news over recent months. Before I continue, for anyone not familiar with my blog, I’m a full-time, very satisfied iPad Pro user. I’m not someone who struggles with the iPad, not someone who longs for macOS on the iPad. For me, iPadOS sings and my interest is in seeing what Apple does to refine the iPad experience rather than any hope of a macOS to iPad face-plant, er, transplant.

May 29, 2023. We’re a week past the release of Final Cut Pro and Logic Pro for the iPad. Apple first announced the release on May 9th and it took everyone by surprise. It’s always fun when they can still surprise everyone! Boom. And, interesting that such a significant announcement is done by a press release a month before WWDC. For the most part the reviews have been very positive. The two most repeated critiques of FCP for iPad: No round tripping to and from the Mac and no editing off of external hard drives. But given this is the initial release, it seems reasonable to expect that these two features will be added before long.

We’re one week away from WWDC 2023. The rumors of a VR/AR headset have been buzzing for the past year. In recent months it’s been rumored that the headset would be running many of Apple’s apps in an iPad-like form. Many assume the headset is now a certainty that will be announced at WWDC 2023.

While much of the excitement about WWDC 2023 has centered on speculation about the headset, its OS and features, I’m curious about the connection that might exist between the headset and the iPad or, more specifically, the potential commonality between the two operating systems. Given the rumor that the headset will, essentially, be running iPad apps, this seems a relevant question to consider. Given the need for energy efficiency, it would make sense that the OS running the headset would be more like iOS or iPadOS than macOS. If true, well, then there is now a new category of hardware that runs on the iOS family.

Another rumor, discussed far less, is a larger iPad Pro, perhaps 15 or 16". With the release of FCP and Logic Pro for iPad, a larger iPad Pro would make a lot of sense. And of course with these new pro apps, it also starts to seem inevitable that Xcode for the iPad will be released. We’ve seen some big improvements to Swift Playgrounds in the past couple years but still no Xcode.

Last year we saw quite a few improvements to iPadOS and the stock iPad apps. Much of this was overshadowed by a few prominent pundits that disliked the implementation of Stage Manager. I’m not going to dwell on that other than to say that the negativity around the feature is greatly overblown. Though not what some wanted, many of us use it and actually like it. It’s certainly made my iPad experience much better, allowing me to use 3-4 apps at once. It’s been a real and measurable productivity boost for the work I do. At the very least, it is Apple’s first step towards an improved multitasking experience on the iPad.

But looking at the improvements brought to iPadOS over the past 2 to 3 years, we have an OS that has steadily matured. The Files app has had many previously missing features added and is now fully functional, nearly on par with the Mac Finder. Stock apps like Notes, Mail, Safari, Reminders have all seen significant improvements.

Putting the puzzle pieces together

If the rumors are true it would seem that Apple has also been developing the headset and its OS for a while. For several years they’ve been publicly promoting Augmented Reality and LiDAR with new hardware and software features on the iPhone and iPad. Tim Cook has been outspoken in his support of AR during that time.

In a week Apple will begin to provide more details about where this journey is going and how they expect these devices to work together. Given previous years' development of the larger Apple ecosystem, it seems likely that not much has been left to chance. Apple has a well thought out plan that it’s been following. It would seem a given that the time and energy put into the development of LiDAR and AR in iOS and iPadOS has been a part of the process of developing the OS and hardware for the headset.

Of particular interest to me: how will the features and technology put into the headset OS overlap or come back to iPadOS?

My expectation and hope for the iPad in 2023 and 2024 is that Apple will continue to fill out and refine the OS and the default apps. I think most of this list is just an obvious continuation of what we’ve already seen.

What I’m hoping or expecting to see in terms of iPadOS and apps:

An iPad Pro 15 or 16". Along with this I’d hope/expect to see other iPad accessories as Apple broadens the iPad platform. A new version of the Magic Keyboard. The iPad Pro needs better battery life, and I’m hoping we’ll see this in the form of a new Magic Keyboard with an integrated battery that can charge the iPad. I imagine the Brydge form factor but with more ports. I can imagine this combination being the iPad Studio. Larger iPad, FaceTime camera moved to the long side, M3, detachable Magic Keyboard that boosts the iPad to 20 hours of battery life. I’d expect it to have the same battery life as a MacBook Pro but to weigh a bit more as it would have 2 batteries. Also, maybe a redesigned Magic Keyboard for the current line of 13" iPad Pro and a new M3 13" iPad Pro.

In short I expect that Apple will double down on its commitment to the iPad platform: hardware, iPadOS, Pro apps and accessories.

Last, a few words on the larger Apple Ecosystem and the new headset as the new TV

Pundit fact check: Final Cut Pro and iPadOS

Yet another installment of an “Apple pundit ignores the facts to write a clickbait story about the limitations of iPadOS”. It’s a bummer that they just make stuff up to fit their preferred narrative. Filipe Espósito over at 9to5Mac in his work of fiction, Final Cut for iPad highlights iPadOS limitations:

This week, Apple finally released Final Cut Pro and Logic Pro for the iPad – two highly anticipated apps for professionals. While this is a step in the right direction, these apps highlight the limitations of iPadOS. I tried using Final Cut for the iPad, but… I wasn’t expecting the iPad version to have all the features available on the Mac in version 1.0. However, the limitations go beyond what I was expecting. And some of these limitations are due to how iPadOS works.

The “limitations” he attributes to iPadOS:

Using LumaFusion on the iPad with an external display

Okay, background export of a movie. To some degree this is a limitation of FCP but also I suspect iPadOS. Certainly Apple made it a choice to not allow it. Using an M1 iPad Pro with 8 GB of memory it’s possible to do a background export with LumaFusion. I just did it. That said, it does fail if I try to do many other tasks with LumaFusion in the background the whole time. I can hop to Notes or Mail or Safari with no problem. But if I were to leave an export in the background and try to hop from Notes to Mail to Safari then back to the export it will have failed. I’d guess that an iPad with 16 GB of memory would do better. And I’d guess that more could be done with iPadOS to allow for apps to be given priority for such background tasks.

Espósito concludes, “And with all these limitations, I’ve given up using Final Cut on the iPad. I’m sure a lot of first-time video makers will have a great time using Final Cut on the iPad. But for those professional users, having a Mac is still the way to go. Hopefully, iPadOS 17 can put an end to some of these limitations.”

He seems to consider himself a “professional” but I would think a professional journalist would have more regard for the truth. Ignoring or distorting the facts to fit a narrative doesn’t make much of a case that one’s journalism is professional.

Why are Mac users so unhappy with their Mac?

For at least a couple years the Apple pundit mantra has been “the iPad isn’t pro unless Apple brings its pro apps to it”. Well, Apple began that process last week with the announcement of Final Cut Pro and Logic Pro for iPad. As I expected, the primarily Mac using pundits are moving the goal posts. Here’s one example that popped up today from Dan Moren in his Stay Foolish column at Macworld, Final Cut Pro changes everything and nothing about the iPad:

This past week, Apple once again took a step towards the idea of the iPad as the modern-day computer replacement with its long-awaited announcement of Final Cut Pro and Logic Pro for the platform–but is it too little, too late?

“Is it too little, too late?” See, right off, it’s not enough. For long-time Mac users nothing Apple does with the iPad will ever make it explicitly a Mac and so the goal posts will always be shifting. Moren mentions Xcode as another missing pro app. In recent years Apple has added new features to Swift Playgrounds, making it a more capable app and I suspect that Apple will, eventually, bring Xcode to the iPad which will move the iPad closer to being complete. But that won’t be enough either.

Hopefully Apple will add the audio input/output features podcasters like Federico Viticci have been asking for. But that’s a very tiny group of users and while it will satisfy that particular need, again, it won’t be enough. The goal posts will move again and again.

Jumping right to the end of Moren’s article, he suggests an iPad that shifts over to being a Mac when connected to a keyboard:

The idea of a device that works as a Mac while connected to a keyboard and an iPad while detached might seem like an unholy Frankstein’s toaster fridge to some, but after 13 years of the iPad, I’d argue that people are pretty comfortable with going back and forth between two (or more) separate devices with different interfaces. Why not find a way to consolidate them?

This really is what Mac users want. They want a touch screen Mac and evidence thus far seems to indicate that they’ll never be happy with iPadOS. Which is totally fine, it’s not for them. But perhaps they should just move along and be happy with their Macs. And yet, they continue to linger on the iPad. It’s almost as if their Macs are incomplete, not quite enough for them. But they seem stuck between devices that they’re not quite happy with.

Moren writes:

What we’re all looking for, ultimately, is the right tool for the job.

Mac users contend that that tool is the Mac, that it is the more complete computer. My suggestion and hope for them is that they can just accept the Mac and move on. Be happy with your Mac. When you need a portable touch screen be happy with your iPhone. Or add in an iPad Mini for your content consumption. Not every device is for you.

I’ve written here many times about my gradual transition to being a full time iPad user. It was gradual and complete. I learned iPadOS and became comfortable with it. With each new iteration of iPadOS I’ve been more satisfied as new features were added. But throughout that time I’ve generally been satisfied, no, delighted by the iPad. I don’t spend my time longing for the iPad to have Mac features or to have it be a Mac. I wish the same for Mac users, that they can learn to be satisfied with what they have in the Mac and not spend so much time wishing for a different computer.

A few thoughts on FCP-Logic on iPad...

I’m generally not big on hot takes so I gave myself a few days to consider before attempting a blog post. As for the news of Final Cut Pro and Logic Pro for the iPad, well, fantastic! Personally, I don’t do much video editing. I’ve currently got a personal/family project going in LumaFusion. My next project might be in FCP just for the fun of learning it. Way back around 2001-2005 I spent some time doing video work and learned FCP but I’ve not had much use for it since then. I’m looking forward to giving it a go.

But to the larger question of the apps, the platform and the response thus far, as I’ve written about before, the loudest complaints about the lack of Apple Pro apps for the iPad seem to come from Apple/tech focused pundits, podcasters, and YouTubers. Some in the first two categories have already admitted in their hot takes on the new release that they don’t actually use those two apps so… 🙄 I expect they’ll keep complaining about the lack of Xcode even though most of them also do not use that.

As for the Apple/tech YouTubers I predict that it will shake out like this: those that have very expensive FCP desktop set-ups will keep using those most of the time for most of their work. Why wouldn’t they if they have already invested so much in a desktop-based workflow? But many of the folks that prefer a mobile workflow away from the desk will try out FCP for iPad and many will use it and prefer it. And of course there will be those that loudly share how it’s not good enough or how it’s still held back by iPadOS. 🙄

Other categories of users: iPad first folks that have been happily using LumaFusion. Some of them will switch over to FCP but others will stay with LumaFusion because it allows editing projects using media from an external drive. LF is pretty feature rich and I suspect many of its users will remain with it.

Then there are the DaVinci Resolve users. It’s relatively new on iPad and I suspect its user base consists of folks that were/are also serious users of the desktop version of DaVinci Resolve. They’ll stay with DaVinci Resolve. And some are probably LumaFusion users that are trying out DR as an experiment. They’ll probably also try out FCP and some of them will end up switching. I’m guessing these folks are kind of up in the air at the moment.

FCP/Logic on the iPad don’t seem to be “lite” versions but full versions with a touch-adapted interface. Some features missing from FCP, like round-tripping and editing from files on an external drive, will likely be added in an update. But also, these apps seem to be aimed at younger users that are focused on creating for social media like TikTok, Instagram, YouTube. I suspect that there will be some solid adoption there.

In its first promo video Apple is touting fast turn-around, quick production on the iPad using it as both camera and editor. For some this will be attractive.

But thinking about the Continuity camera feature that allows for an iPhone to be used with a Mac for live FaceTime calls, I can imagine a new feature with iOS 17 where such live video allows for capture from iPhone to an iPad running FCP. This would seem to be a pretty attractive feature in certain scenarios. But the point is this is just the first shoe to drop in FCP for iPad, new features will come and given Apple’s track record of ecosystem integration, I can imagine we can expect to see them offer up features like the above.

I’d guess it won’t be too long before we see a new version of FCP for Mac that further leverages the hardware ecosystem with round tripping from iPad to Mac and adding in the iPhone as a live camera feed for Mac FCP as well. Again, just my speculation.

I’m glad to see Apple deliver these apps if for no other reason than that it might dampen some of the continued complaining from the primarily Mac using pundits. It also boosts the notion that Apple is committed to the iPad as a platform. Mac users not happy with the iPad should just move on. Those that want to use the iPad as their computer can continue doing so but with added assurance that Apple continues to take the iPad seriously.

A few related links of note:

iJustine actually had some hands on time: her video

Dylan Bates, a Final Cut Pro YouTuber, offers his thoughts on FCP for iPad. Not surprisingly, there were several FCP using YouTubers offering their takes based on the above-linked promo video and Apple’s website.

And Jason Snell at Six Colors offered his thoughts

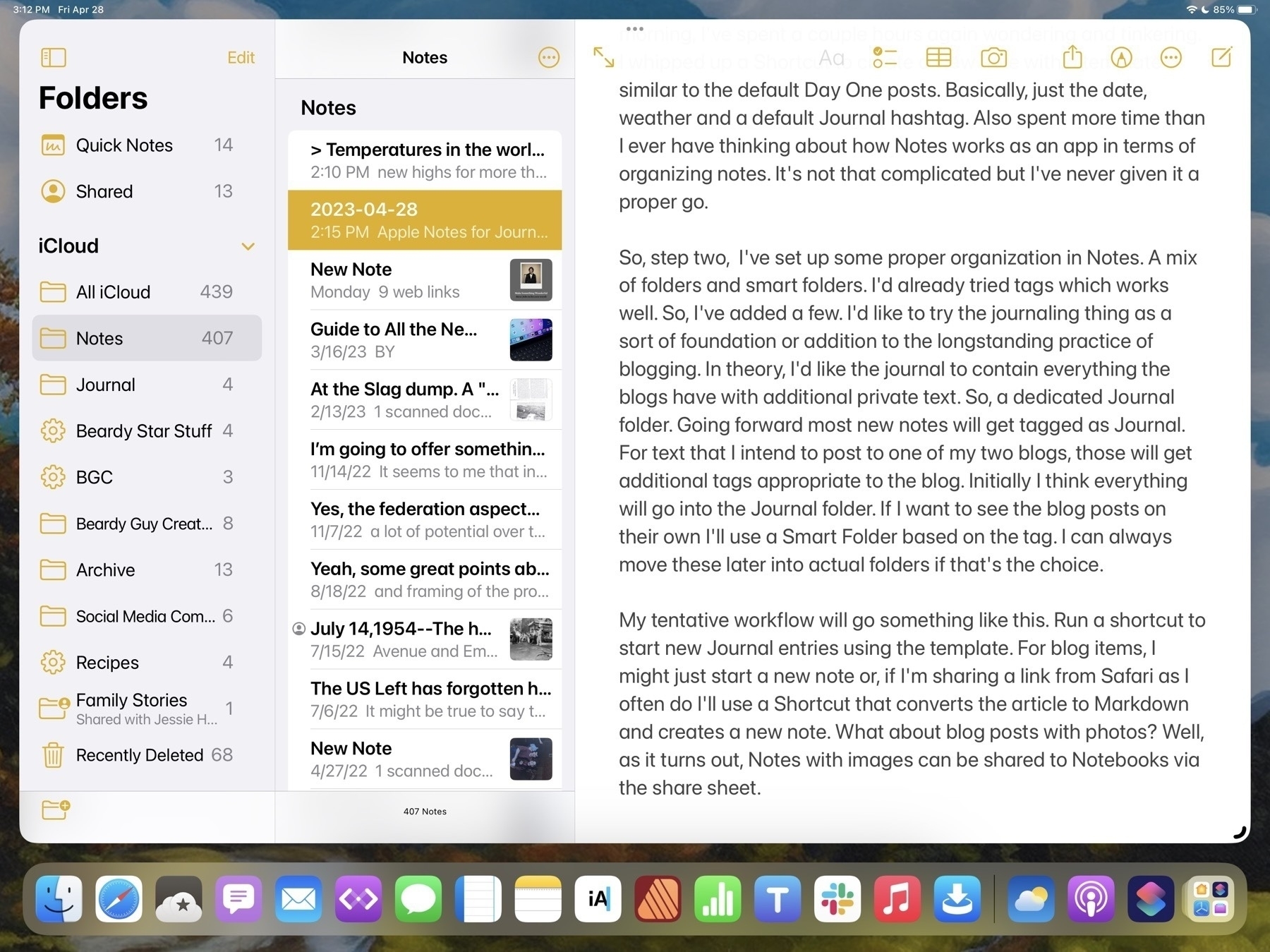

Apple Notes for Journaling and Blogging?

As is common with many Apple nerds, I like to play with text apps. My primary purpose is blogging which has also served as a journal of sorts.

For quite a long time I used ByWord for this. Then Ulysses, then iA Writer. iA Writer stuck longer than almost anything else. Then a brief dalliance with Obsidian, Taio, and Notebooks. I’ve spent the past few months with Notebooks which has served pretty well. Review here. The only problem there is that it continues to be somewhat wonky. It’s a great app but often feels buggy. So I’ve been using it as a partner with iA Writer. Where Notebooks shines is in adding images for blog posts. I can copy a single or multiple images from Photos then insert into a Notebooks draft. Not only does the app downsize from full quality but it inserts each as a Markdown link. If I move the file to a folder it also moves the images to a corresponding sub folder. It works fantastically.

So, why mess with it if it’s working? Yesterday in the MacBreak Weekly podcast Andy Ihnatko recommended Day One for journaling. I’d never tried it. There’s a free option so I downloaded it. My thought being, hmm, perhaps I could have a private journal in addition to blogging? Okay, will try it. Then thought, hmmm, I could also use this for blogging. I always gravitate to blogging.

I spent an hour with it and loved it. All except for the fact that using the arrow keys while writing/editing text does not move the cursor up or down, left or right, but navigates the files. Ugh. Well, that’s a show stopper. No setting I could find to change it. But so much to like about the app other than that. Edit to add that upon trying this again today, well, it works as expected. Unless it was something wonky with my keyboard I’ll have to say it was user error. 😬

The free version does have other limitations such as no sync between devices, only one image per post, only one journal. So, with these things in mind I started thinking again about Apple’s Notes app.

In the past I’d thought to try to make Notes work as a part of my blogging process. It didn’t quite take. So, this morning, I’ve spent a couple hours tinkering. I whipped up a Shortcut to create a new note with a template similar to the default Day One posts. Basically, just the date, weather and a default Journal hashtag. Also spent more time really looking at the organizing possibilities of Notes. It’s not that complicated but I’ve never given it a proper go.
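To make the template idea concrete, here is a minimal sketch in Python rather than the actual Shortcut. The function name `journal_template`, the ISO date format, and the idea of passing the weather in as plain text are all my own assumptions for illustration; the real Shortcut pulls the date and weather from the system.

```python
from datetime import date


def journal_template(day: date, weather: str, tag: str = "Journal") -> str:
    """Build the starting text of a journal note (hypothetical helper).

    Layout assumed here: a date line, a weather line, a blank line,
    then a default hashtag so the note can be found by tag later.
    """
    heading = day.isoformat()  # e.g. 2023-05-30; the date format is an assumption
    return f"{heading}\n{weather}\n\n#{tag}\n"


if __name__ == "__main__":
    # The weather text is supplied by the caller; nothing is fetched here.
    print(journal_template(date.today(), "Sunny, light breeze"))
```

The point is only that the template is plain text with a predictable first few lines, which is why it is easy to reproduce in Notes.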

Step two, then, was to set up some proper organization in Notes. A mix of folders and smart folders. I’d already tried tags which work well. So, I’ve added a few. I’d like to try the journaling thing as a sort of foundation or addition to the longstanding practice of blogging. In theory, I’d like the journal to contain everything the blogs have with additional private text. So, a dedicated Journal folder. Going forward most new notes will get tagged as Journal. For text that I intend to post to one of my two blogs, those will get additional tags appropriate to the blog. Initially I think everything will go into the Journal folder. If I want to see the blog posts on their own I’ll use a Smart Folder based on the tag. I can always move these later into actual folders if that’s the choice.
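The folder-plus-tags arrangement can be sketched as a simple filter. This is not how Notes works internally, just a rough Python model of the idea: every note lives in one folder, and a smart folder is a saved query over tags. The `Note` class, the `smart_folder` function, and the tag name `BlogA` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Note:
    """A toy stand-in for a note: one real folder, any number of tags."""
    title: str
    folder: str
    tags: set[str] = field(default_factory=set)


def smart_folder(notes: list[Note], tag: str) -> list[Note]:
    """Return the notes carrying the given tag, whatever folder they sit in."""
    return [note for note in notes if tag in note.tags]


if __name__ == "__main__":
    notes = [
        Note("Morning walk", "Journal", {"Journal"}),
        Note("iPad post", "Journal", {"Journal", "BlogA"}),  # "BlogA" is a made-up tag
    ]
    for note in smart_folder(notes, "BlogA"):
        print(note.title)
```

Because the smart folder is only a view, nothing moves: the blog drafts stay in the Journal folder and can still be relocated to real folders later.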

My tentative workflow will go something like this. Run a shortcut to start new Journal entries using the template. For blog items, I might just start a new note or, if I’m sharing a link from Safari, as I often do, I’ll use a Shortcut that converts the article to Markdown and creates a new note. What about blog posts with photos? Well, as it turns out, notes with images can be shared to Notebooks via the share sheet.

Any post, with or without a photo, can be easily shared from Notes to Notebooks. So, once I’ve got a post ready for sharing I’ll just send it to Notebooks, hop over to iA Writer which has direct publishing to Micro.blog and WordPress and which has access to my Notebooks folder. With a tap I’ve got the post open then another tap to publish. So, this workflow starts in Notes, ends in iA Writer and I have a copy in the Notes app as well as a discrete Markdown file in iCloud. Exactly what I want.

Downsides? Notes does not have built-in support of Markdown. Apps that do have that support provide convenient one-click formatting or keyboard shortcuts. But it's not all that hard to type the Markdown code when needed as it's designed to be simple. Notes tries to do fancy preview links which are nice but not what I want. I don't think there's an option to turn that off. But just pasting a url works.

Editing to add that after originally posting this to the blog someone on Mastodon chimed in that Everlog is another nice journaling app with Markdown support. I'm trying it out and it is excellent and doesn't have the flaws that Day One has. Now that I've got this handy-dandy Notes based system I'm not sure though that I want to abandon it.

My Affinity Publisher for iPad Review

Serif has set a high bar with the Affinity apps on the iPad and Publisher allows all three to work together seamlessly. And at a time when Apple pundits continue to doubt the potential of the iPad as a powerful tool for creative work, Serif demonstrates what is possible.

If only those pundits could occasionally step away from churning the rumor mill for a bit they might actually discover there are still innovative…

Apple Pundit: The iPad is Doomed

Apparently debunking iPad misinformation has become one of my hobbies. Sometimes I’m able to just walk away but the lure of “someone is wrong on the internet” is strong, so, here I am. The latest is Michael Gartenberg at Business Insider. Of course, it begins with a pure link-bait title: I Was a Die-Hard Apple iPad Fan. Not Anymore — Here’s Why. It’s behind a paywall but you can use Safari’s Reader Mode to get around that. Not that I encourage you to give them the click. I’ve got full quotes below.

The article came to my attention when I was browsing the iPad hashtag on Mastodon. I found Michael’s post via another user’s response. I engaged him but, like the article, found his responses lacking substance. These articles really are just filler text for clicks, all repeating the same narrative and offering little in the way of actual thought. And they get paid for this.

Let’s get this over with.

I still own an iPad Pro, but I hardly ever reach for it. It's a cool device and significantly better than what was introduced in 2010. At the same time, it's a jack-of-all-trades but master of none, so I can no longer justify its existence as part of my ecosystem.

Okay, Michael admits that he hardly uses his iPad. In my exchange on Mastodon I pointed to this as evidence that, perhaps, if he’s not regularly using the device it’s also possible that he’s not keeping up with the new features. Other Apple Pundits often complain about missing features that are not actually missing and are often easy to find if given minimal effort. But he assured me that he’s very familiar with iPadOS.

Also note his mention of it being a “jack-of-all-trades” but master of none. In this context it would seem he’s referring to the iPad as a general purpose computer to which I would respond: Yes, exactly. We’ll get back to this later but he’s not really clear on what he actually means here.

One of the iPad's biggest issues is that its hardware is increasingly overpowered relative to the apps available for the device. With each new iteration, the iPad has gotten faster and more capable, but the quality and quantity of iPad apps have not kept pace, despite Apple's promises of "desktop class" apps for it.

I believe he’s referencing last year’s WWDC when Apple introduced iPadOS improvements that would enable new options for the toolbar in apps. Apple’s own apps such as Pages, Keynote, and Numbers now take advantage of this as do some third party apps. It’s up to developers to add these new features to their apps.

So iPad users, like myself, are paying a premium for hardware that is far more advanced than the software we are able to run on it.

I’d be curious to know what apps Michael actually wants to use that are unavailable. What I see of Apple pundits is that many seem to earn their living writing or talking into a microphone. Are they also film editors, audio engineers and app developers on the side?

But really, it’s become an Apple pundit trope. It’s the exact same for all of these types of articles and it’s not true that pro-level apps are not available.

For writers like Michael there is a wide range of word processor apps. For general office work there are Apple’s own iWork offerings as well as Microsoft’s Office and lots of other excellent text and markdown apps.

Later in the article in further mentioning “pro” apps that are on the Mac but not the iPad he writes: “everything from Adobe’s creative applications to Microsoft’s Visual Studio, or for that matter, Apple’s own app-development platform, Xcode.”

See, these are probably not apps Michael needs at all. And I’d guess that it’s a very small group, proportionally, that needs Xcode or Visual Studio. The same for Final Cut Pro or Logic. Remember, the headline of this article is all about why Michael had to give up the iPad. He never actually says anything at all about his personal use case. This is really more about doing a hit piece on the iPad because that’s a popular thing to do.

But let’s play along a little further. He specifically calls out Adobe as missing. Weird. I’ve got the App Store open and Adobe Illustrator for iPad has 26,000 ratings with an average of 4.6. Photoshop has 52,000 ratings, average of 4.4. There’s also Lightroom with 31,000 ratings, 4.8 average, Premiere Rush, 107,000 ratings, 4.6 average and Fresco 30,000 ratings, 4.7 average. Also available is Adobe Acrobat for editing pdfs (there are lots of pdf editors). Wow, if only Adobe had some iPad apps maybe we could get something done over here.

Now, to be clear, I don’t use these apps and from skimming reviews these apps are not yet equal to their desktop counterparts. Even so, they have many thousands of reviews with a decent average rating. So, it would appear at least some out there find them useful. And in the end it’s on Adobe to develop these apps.

But what Michael does not acknowledge anywhere in the article is the fact that over the past several years many third party developers have worked very hard to bring pro level apps to the iPad. Before Adobe got in the game Serif brought Affinity Designer and Photo to the iPad several years ago. And with their most recent 2.0 update they’ve also brought Publisher into the suite for iPad. It’s a full on, fully capable competitor to Adobe’s InDesign and one of my most used apps.

Another notable pro-level app is DaVinci Resolve, a well regarded video editor that was recently released for the iPad. Another high quality video editor is LumaFusion, which has been available for several years. Both are very well regarded video editing apps but are ignored by Michael. Not surprising given that the existence of all these apps (and many, many more) runs counter to the Apple Pundit narrative that there are no pro-level apps available for the iPad.

But he jumps into another popular meme which is less about the iPad and more about Apple’s supposedly terrible relationship with developers. That goes beyond the scope of this post but I did want to point out that to make his argument Michael cites a Twitter post by a developer who’s having issues with a game:

If Apple plans to make the iPad a truly capable device with first-class apps, it's going to need to take developer relationships a lot more seriously. Developers should not have to appeal on social media to get Apple's attention on highly subjective App Store rules and whims.

So, to be clear, the App Store and developer relations are an iPad problem and the one anecdote he points to, citing “first-class apps”, is a game.

Let’s move on.

Another major issue is that the user interface, based on iOS, has become increasingly complicated over time. With each new release of iOS, Apple has added features and functionality, making the operating system more powerful but more complex. This has made it more difficult for users to find the features they need, leading to confusion and frustration.

Okay, now we’re getting into the complaints about iPadOS. A common complaint from primarily Mac using pundits is that iPadOS is too limited, too simple. But here Michael is suggesting that it’s too complicated, with too many features and too much functionality. My head is spinning and I think I’ve got whiplash. How is iPadOS simultaneously too basic and not powerful enough and yet too complicated with added features and functionality?

And remember, by his own admission, Michael hardly ever touches his iPad. And yet he expects to know how to use the new features? Is this supposed to happen via osmosis when he touches his iPad? Look, if you want a more powerful, more capable OS you will have to take at least a little time learning those new features as they are added. Some actual effort is needed to learn how to use a more complicated, powerful device.

You know what else requires a new user to spend some time learning? A Mac. A new Mac user has to take time to explore and become familiar with the features. As iPadOS becomes more capable users that want to take advantage will have to do the same.

Some users will just use the more basic functionality that’s been present in the iPad for the past 13 years. My parents, both iPad users, have no idea that split screen exists, they’ve never used that feature. Using two windows of the same app side by side? Not a thing for them. What’s multi-tasking? They just happily use the very simple iPad. That’s the balance Apple continues to maintain for different users.

Michael then discusses yet another “problem” of the too simple, too complicated iPad: gestures. Yes, it’s true, the iPad started life as a touch screen computer! That meant learning gestures. 13 years ago they were simpler and over the years more have been added. Does Michael expect that a new user should just be able to pick up the iPad and by virtue of touching it they would know all of these gestures?

Here are just a few examples. The iPad supports a wide variety of gestures, including swipes, pinches, and taps, that can be used to navigate and interact with apps. But many users find these gestures hard to learn, and they are not apparent without some explanation or guidance beyond what the iPad offers.

Let’s be clear, these are all the same gestures that one uses with an iPhone but we don’t see an outcry about how hard the iPhone is to use! So weird that it’s only a problem on the iPad.

Somehow, my 80 year old granny whose only computer use prior to her iPad was playing solitaire on an old Windows PC was able to manage her iPad within a couple hours of practice sitting next to a family member. Within a few days she was happily sending email, posting to Facebook, getting games, sending messages. The same for several others in my family that had never used a desktop computer. Did they pick up their iPad and suddenly know how to use it? Actually, those that had iPhones did. They got it instantly. Others needed a little guidance in the first few hours. But all became proficient in the basic, essential gestures within their first day or two.

But you know, for anyone that might need some tips or reminders going forward, there’s the internet. A search for iPad gestures yields this page from Apple.

Ever forward, we must push forward. We’re getting close to the end.

While the iPad is highly customizable, the process of customizing settings and preferences is more than a bit arcane. Configuring things like the "dock," the "control center," and your notifications can take some time to figure out, and the options are buried levels deep under settings.

Yeah, this isn’t the problem it’s being made out to be. This is called learning something new and it’s not specific to the iPad. Again, everything Michael mentions here is also true of the iPhone. Certainly, diving into the more powerful features of iPadOS, should a user want them, does require more effort, more time to explore and learn. Just as they might need to do on the Mac.

But at this point these “problems” of the iPad are greatly exaggerated and in some cases, not at all specific to the iPad. But, you know, juicy headline and all that.

Stage Manager, the iPad's new showcase feature for multitasking, isn't even turned on by default. It's hidden away — and for good reason. It's difficult to use, and I would need to write a full-length review to describe just how truly awful my experience with it has been.

It is off by default. Again, Apple sides with simplicity to cater to the larger pool of users that likely don’t want or need Stage Manager and probably have not even learned of its existence. That’s good. The “pro” users will have learned of Stage Manager (advanced users are those that pay attention to Apple-related news, Apple’s website, WWDC announcements, etc). They will have learned about Stage Manager and will turn it on when they’re ready to check it out.

But hard to use? No, it’s not. It’s really not. Is it more complicated than the previous windowing offered on the iPad? Of course, by definition, yes. It’s a new feature that does more, has more options. So yes, it does require at least a little effort, a little practice. But again, this is an advanced feature for advanced users. It should be expected that some effort will be involved. I’m not special, no super powers. I turned on Stage Manager and got the basics within minutes. With a little practice I was comfortable and having no problems other than the instability and oddities that came with running a beta. By the time of the final release it was vastly improved and much more usable.

An in-depth review from MacStories' Federico Viticci tells you all you need to know: He spends most of it venting about his inability to get the feature to work without crashing his whole system.

No. No, his review does not tell us all we need to know. Look, Federico is known as the iPad guy. It’s a part of his online brand and how he earns his living. It’s beyond the scope of this post but it’s become a meme that the largely Mac-using Apple Punditry point to Federico as the exception to the rule. The stubborn guy who has to work extra hard to make the iPad work for him. “You can’t get real work done on an iPad” is a decade old meme and Federico is the most known Apple pundit fighting that fight. All the while being told: Just get a Mac! It’s become a part of the iPad story for those that listen to Apple/tech podcasts or read the websites.

There’s a lot to unpack with Federico, his role of championing the iPad in this sphere and his current take on the iPad. Again, not really the subject of this post so I’ll leave it.

The specific point I’d make is that Michael calls forth Federico as the sole authority whose opinion is all we need to know and that’s ludicrous. I’ve got a news flash for Michael, there are likely many thousands of iPad users who are not Federico, who are not podcasters, who do not have his tastes or preferences and those users might well be very happy with Stage Manager. I know I am.

Okay, winding down with the last bit of the article in which Michael worries about the future of the iPad should Apple make a touch screen Mac.

A touchscreen MacBook would kill the iPad

Presumably, it would arrive as a touchscreen-keyboard situation, a form factor that’s more familiar and traditional for laptop users. This would make the iPad practically obsolete. Virtually anything one could do on an iPad would now be possible on a Mac, while the reverse would not be true.

Again, spoken like an Apple pundit who apparently has no family members that are not just like him. Does it really not occur to him that maybe some of the users of hundreds of millions of iPads that have been sold over the past 13 years would be better served by an easier to use iPad than they would a Mac? Mr. Gartenberg I’d like to introduce you to my granny, my aunt, my uncle, my mom, my dad, my sister… all of them iPad users, none of them Mac users.

Speaking for myself though, I don’t want a laptop. Some Mac users seem to find it hard to comprehend that some of us using the iPad do not want a laptop. Sometimes I do want a laptop like experience and that’s when I dock my iPad to a keyboard. That’s it, laptop mode achieved. But other times I want my iPad to be just a screen, hand held or propped up on a kickstand. Nor do I want macOS. I used the Mac from 1993 till 2016 at which point I came to prefer the iPad.

So, no, I don’t think a touchscreen Mac (only a rumor at this point) will kill the iPad.

Apple pundits, please, please, do some original thinking and stop with the clickbait. If you feel that drawn to write about the iPad how about doing some quality tech journalism that harkens back to the late 90s when we could get real reviews of software instead of this stream of despair and outrage.

While the Files app on the iPad did start out as a pretty basic app that was far short of the Finder on the Mac it’s come a long way since then. With each version of iPadOS it has gained new features, slowly bringing it closer to the Finder. Exploring the Files App on iPadOS 16